What is Duplicate Content?

Duplicate content refers to blocks of text that are either identical or significantly similar to content found on multiple URLs, either within a single website or across different websites. This can include exact copies or near-duplicates, where content is slightly rewritten but still largely the same. Duplicate content can occur due to various reasons such as URL variations, session IDs, or content scraping. It’s important to note that elements such as headers and footers, which are consistent across a website, do not typically count as duplicate content as they are part of the site’s structural design.
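One rough way to put a number on "significantly similar" is to compare overlapping word sequences (shingles) between two texts. The sketch below is only illustrative: the function names, the three-word shingle size, and the idea of scoring similarity with a Jaccard ratio are assumptions made here, not a description of how any search engine actually detects duplicates.

```python
import re
from typing import Set, Tuple


def shingles(text: str, k: int = 3) -> Set[Tuple[str, ...]]:
    """Return the set of k-word sequences in the text (lowercased, punctuation dropped)."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < k:
        # Very short texts become a single shingle (or nothing if empty).
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard_similarity(a: str, b: str, k: int = 3) -> float:
    """Share of shingles the two texts have in common, from 0.0 to 1.0."""
    sa, sb = shingles(a, k), shingles(b, k)
    if not sa and not sb:
        return 1.0  # two empty texts are treated as identical
    return len(sa & sb) / len(sa | sb)
```

With these assumptions, two sentences that differ by a single word in the middle ("the quick brown fox jumps over the lazy dog" versus the same sentence with "leaps") score 0.4, while identical texts score 1.0. Any cutoff for calling a pair a near-duplicate is a judgment call for your own content.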

Internal vs. External Duplicate Content

- Internal Duplicate Content: Occurs when similar or identical content exists across multiple pages within the same domain. This can be the result of poor URL management, such as having both “www” and “non-www” versions of pages accessible, or improper use of URL parameters. An example is a single article published twice under different URLs within the same site.

- External Duplicate Content: Happens when content from one domain is replicated on another. This might be due to content scraping or syndication, where content is intentionally shared across different platforms. For instance, if a blog post is republished on another site without a canonical tag pointing to the original source, it creates external duplication.

Impact of Duplicate Content on SEO

Duplicate content can significantly affect search engine optimization (SEO), as search engines strive to present users with the most relevant and unique content. Here’s how duplicate content impacts SEO:

- Confusion in Search Rankings: Search engines may struggle to determine which version of the content is the original or the most relevant, so the version that ranks may not be the one you intended, and every version may rank lower than a single consolidated page would. Google prefers to index unique pages, so duplicates can mean missed ranking opportunities and a poorer user experience when traffic lands on a less optimized page.

- Dilution of Link Equity: If multiple pages contain the same content, backlinks may be distributed across these pages, reducing the overall authority and ranking potential of any single page. This self-competition among pages with similar content fragments the link equity that could have consolidated a single authoritative page.

- Crawl Budget Wastage: Crawling duplicates consumes a website’s crawl budget, which can limit how many unique pages search engines crawl and index and suppress the visibility of original content. In extreme cases, Google might not index the duplicate pages at all, which is especially detrimental for large websites such as e-commerce platforms with extensive product listings.

Common Causes of Duplicate Content

- URL Variations: Different URL parameters or session IDs can create multiple versions of the same page. Printer-friendly pages or sorting parameters can also cause duplication. Ensuring consistent URL structures and employing canonical tags can mitigate this issue.

- HTTP vs. HTTPS and WWW vs. Non-WWW: Websites accessible through both secured (HTTPS) and unsecured (HTTP) protocols, or with and without “www,” can lead to duplicate content issues. Proper redirects and consistent linking practices can help maintain a single version of a page.

- Scraped or Syndicated Content: Content copied from one site and published on another without proper attribution can create duplicates. Even legitimate content syndication, if not managed correctly, can lead to SEO issues. Using canonical tags in syndicated content helps point search engines to the original source.

- Product Descriptions: This is especially common in e-commerce, where the same or similar product descriptions are used across multiple sites, leaving each page with little unique content. Writing distinctive product descriptions for each platform helps differentiate the content and improve SEO performance.
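The URL-related causes above (protocol, "www", session IDs, sorting and print parameters) can be illustrated with a small normalization sketch that maps several variants to one preferred form. The host `www.example.com`, the `IGNORED_PARAMS` list, and the function name are hypothetical; which parameters are safe to ignore depends entirely on your own site.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters assumed not to change the page's main content.
IGNORED_PARAMS = {"sessionid", "sid", "sort", "print",
                  "utm_source", "utm_medium", "utm_campaign"}


def normalize_url(url: str, preferred_host: str = "www.example.com") -> str:
    """Map protocol, www, and parameter variants of a URL to one preferred form."""
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()  # note: any explicit port is dropped

    def strip_www(h: str) -> str:
        return h[4:] if h.startswith("www.") else h

    # Treat the www and non-www hosts as the same site.
    if strip_www(host) == strip_www(preferred_host):
        host = preferred_host

    # Drop ignorable parameters and sort the rest so their order does not matter.
    query = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    )
    path = parts.path or "/"
    # Always use HTTPS and discard any fragment.
    return urlunsplit(("https", host, path, urlencode(query), ""))
```

Under these assumptions, `http://example.com/shoes?sort=price&sessionid=abc` and `https://www.example.com/shoes` normalize to the same string, which is a quick way to spot URL variants in a crawl export before deciding where redirects or canonical tags are needed.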

Addressing Duplicate Content Issues

Identifying Duplicate Content

- Site Audits: Use tools like Google Search Console or specialized SEO software to identify duplicate content within your site. Regular audits can help detect and resolve issues before they impact search rankings.

- Exact Match Searches: Perform a Google search with exact phrases from your content in quotes to find external duplicates. This can highlight unauthorized use of your content on other websites.
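For internal audits, a simple first pass is to group pages whose text is identical once case and whitespace are ignored. The sketch below assumes you already have the extracted body text for each URL (for example from a crawl export); the function name and the input format are illustrative and not tied to any particular SEO tool.

```python
import hashlib
from collections import defaultdict
from typing import Dict, List


def find_exact_duplicates(pages: Dict[str, str]) -> List[List[str]]:
    """Group URLs whose text is identical after normalizing case and whitespace."""
    groups = defaultdict(list)
    for url, text in pages.items():
        normalized = " ".join(text.lower().split())
        digest = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        groups[digest].append(url)
    # Keep only groups that contain more than one URL.
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


# Hypothetical crawl output: URL -> extracted body text.
pages = {
    "/article": "Ten tips for better sleep.",
    "/blog/article": "Ten  tips for better SLEEP.",
    "/about": "About our team.",
}
duplicate_groups = find_exact_duplicates(pages)
```

This only catches exact matches. Near-duplicates, where the text has been lightly rewritten, need a similarity measure rather than a hash.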

Solutions for Duplicate Content

- Canonical Tags: Use the rel=canonical tag to indicate the preferred version of a page to search engines, consolidating link equity to the canonical URL. This is especially useful for managing content syndication and product pages.

- 301 Redirects: Implement permanent redirects from duplicate pages to the original content to consolidate authority and prevent visitors from accessing duplicates. This technique effectively handles multiple URL variations.

- Noindex Meta Tags: Use noindex tags on duplicate pages to prevent them from being indexed by search engines, while still allowing them to be crawled. This can help manage duplicate content that must remain accessible for usability but should not appear in search results.

- Consistent URL Structure: Ensure consistent usage of URLs across your site, avoiding unnecessary variations in parameters or structures. This includes maintaining uniformity between HTTP and HTTPS, and “www” and non-“www” versions.

- Unique Content Creation: Focus on creating unique and valuable content, especially for product descriptions and meta tags, to differentiate your pages from others. This is crucial for maintaining a competitive edge in search rankings.
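To check that the canonical and noindex signals described above are actually present on a page, you can parse its HTML. The following is a minimal sketch using Python's standard-library `html.parser`; the class name, helper function, and sample markup are hypothetical, and it reads only the `<link rel="canonical">` element and the robots meta tag, not HTTP headers.

```python
from html.parser import HTMLParser


class IndexingSignals(HTMLParser):
    """Collects the canonical URL and any noindex directive from a page."""

    def __init__(self):
        super().__init__()
        self.canonical = None
        self.noindex = False

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        rel_values = (attributes.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel_values:
            self.canonical = attributes.get("href")
        elif tag == "meta" and (attributes.get("name") or "").lower() == "robots":
            content = (attributes.get("content") or "").lower()
            directives = [d.strip() for d in content.split(",")]
            if "noindex" in directives:
                self.noindex = True


def read_signals(html: str):
    """Return (canonical_url_or_None, noindex_flag) for an HTML document."""
    parser = IndexingSignals()
    parser.feed(html)
    parser.close()
    return parser.canonical, parser.noindex


# Hypothetical page: a printer-friendly duplicate pointing at its original.
sample = """
<html><head>
  <link rel="canonical" href="https://www.example.com/guide">
  <meta name="robots" content="noindex, follow">
</head><body>Printer-friendly version</body></html>
"""
canonical_url, is_noindex = read_signals(sample)
```

Running a check like this across duplicate URLs makes it easier to spot pages that are missing a canonical tag or that point to the wrong preferred version.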

Monitoring and Maintenance

Regularly monitor your website for duplicate content issues, especially after major changes or updates. Use tools like Google Search Console to track indexed pages and address any newly identified duplicates promptly. Staying proactive ensures that your site maintains its search visibility and authority.

Duplicate Content in Affiliate Marketing

Duplicate content is a particular challenge in affiliate marketing. Affiliates often use manufacturer descriptions or content provided by the parent company, which leaves many sites with identical content and can dilute the effectiveness of affiliate pages in search engine rankings. Affiliates should therefore create unique content that adds value, such as personalized reviews or additional insights, to stand out from competitors and improve SEO performance.

Conclusion

While duplicate content is a common issue, it is manageable with the right strategies and tools. By understanding its impact on SEO and taking proactive measures to address it, website owners and affiliate marketers can ensure their content remains visible and authoritative in search engine results. Consistently applying best practices for managing duplicate content will help maintain your site’s SEO health and enhance its competitive positioning online.

Frequently Asked Questions

How can I fix duplicate content issues?

Two common ways to fix duplicate content issues are 301 redirects and the rel="canonical" tag, both of which point search engines to the preferred version of a page.

How can I check for duplicate content?

You can check for duplicate content by using third-party tools that detect plagiarism and duplicate pages on a website, or by searching Google for exact phrases from your content to see whether they appear elsewhere on the internet.

Is duplicate content bad for SEO?

Duplicate content can be bad for SEO because it makes it difficult for search engines to determine which version of the content is most relevant to a given search query. Additionally, if a website contains a lot of duplicate content that appears intended to manipulate rankings, search engines may penalize it; ordinary, non-deceptive duplication is more likely to be filtered out of results than penalized.

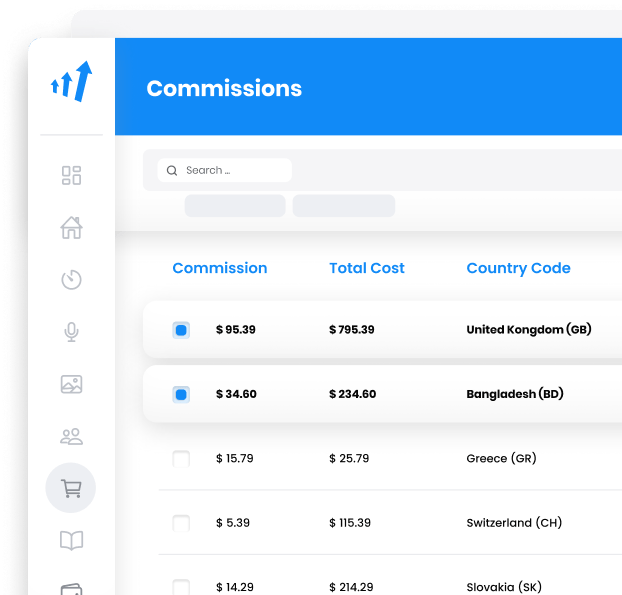

Discover the role of an affiliate in marketing—earn commissions by promoting products through banners, links, and campaigns. Learn how affiliates drive sales and explore effective marketing strategies. Join the world of affiliate marketing with Post Affiliate Pro for user-friendly tools and exceptional support.

Discover the power of direct linking in affiliate marketing with Post Affiliate Pro. Learn how this simple, effective SEO technique can boost your Google rankings and simplify affiliate management. Explore the pros and cons of direct versus indirect linking and why it might be the right choice for your strategy.

Discover the power of redirects with Post Affiliate Pro's comprehensive guide. Learn about 301, 302, and Meta Refresh redirects, their purposes, and configuration differences. Enhance your site's SEO with 301 redirects to preserve rankings and pass on link equity. Understand when to use each type for optimal user experience and technical setup. Explore more to boost your site’s performance!